改写港科大VINS-Mono系统到ROS2版本,出现只能调用一个节点的问题

-

因为实验室的工作需要,最近我在尝试把VINS-Mono( https://github.com/HKUST-Aerial-Robotics/VINS-Mono )改写成ROS2(我用的是foxy)版本。目前用colcon build编译都已经通过,但是在使用ros2 run命令运行的时候发现不能像源程序一样多线程运行。VINS-Mono主要包含三个线程(vins_estimator, feature_tracker, pose_graph),我发现用ros2 run命令运行的时候分别调用三个节点它们会各自正常运行,但是正常情况下应该是运行vins_estimator_node可以调用起其他两个节点。下面是一些节点间通信的主要代码。求大神告诉我是哪里出了问题

estimator_node.cpp

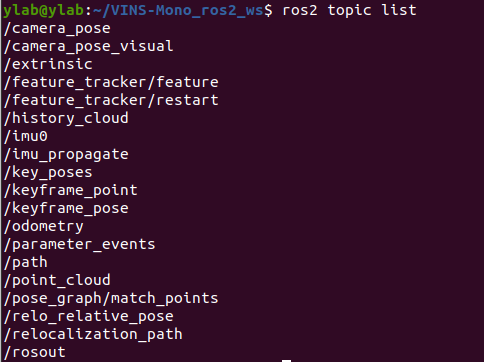

... int main(int argc, char **argv) { rclcpp::init(argc, argv); auto n = rclcpp::Node::make_shared("vins_estimator"); string config_file = argv[1]; printf("config_file : %s\n", argv[1]); readParameters(config_file); estimator.setParameter(); #ifdef EIGEN_DONT_PARALLELIZE ROS_DEBUG("EIGEN_DONT_PARALLELIZE"); #endif ROS_WARN("waiting for image and imu..."); registerPub(n); auto sub_imu = n->create_subscription<sensor_msgs::msg::Imu>(IMU_TOPIC, rclcpp::QoS(rclcpp::KeepLast(2000)), imu_callback); auto sub_image = n->create_subscription<sensor_msgs::msg::PointCloud>("/feature_tracker/feature", rclcpp::QoS(rclcpp::KeepLast(2000)),feature_callback); auto sub_restart = n->create_subscription<std_msgs::msg::Bool>("/feature_tracker/restart", rclcpp::QoS(rclcpp::KeepLast(100)), restart_callback); auto sub_relo_points = n->create_subscription<sensor_msgs::msg::PointCloud>("/pose_graph/match_points", rclcpp::QoS(rclcpp::KeepLast(100)), relocalization_callback); std::thread measurement_process{process}; rclcpp::spin(n); return 0; }以下是运行vins estimator node时的话题输出:

feature_tracker_node.cpp

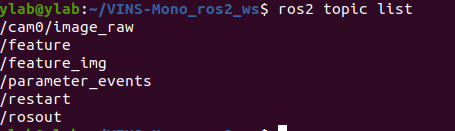

... int main(int argc, char **argv) { rclcpp::init(argc, argv); auto n = rclcpp::Node::make_shared("feature_tracker"); //ros::console::set_logger_level(ROSCONSOLE_DEFAULT_NAME, ros::console::levels::Info); string config_file = argv[1]; printf("config_file : %s\n", config_file); readParameters(config_file); for (int i = 0; i < NUM_OF_CAM; i++) trackerData[i].readIntrinsicParameter(CAM_NAMES[i]); if(FISHEYE) { for (int i = 0; i < NUM_OF_CAM; i++) { trackerData[i].fisheye_mask = cv::imread(FISHEYE_MASK, 0); if(!trackerData[i].fisheye_mask.data) { ROS_INFO("load mask fail"); //ROS_BREAK(); } else ROS_INFO("load mask success"); } } auto sub_img = n->create_subscription<sensor_msgs::msg::Image>(IMAGE_TOPIC, rclcpp::QoS(rclcpp::KeepLast(100)), img_callback); pub_img = n->create_publisher<sensor_msgs::msg::PointCloud>("feature", 1000); pub_match = n->create_publisher<sensor_msgs::msg::Image>("feature_img",1000); pub_restart = n->create_publisher<std_msgs::msg::Bool>("restart",1000); /* if (SHOW_TRACK) cv::namedWindow("vis", cv::WINDOW_NORMAL); */ rclcpp::spin(n); return 0; } 以下是单独运行feature_tracker节点时的话题输出信息: pose_graph_node.cpp

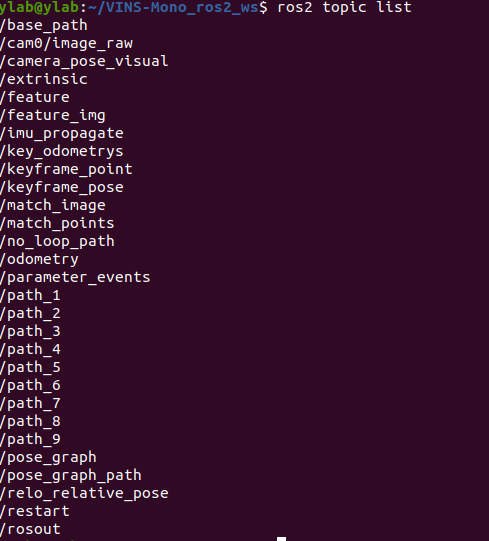

int main(int argc, char **argv) { rclcpp::init(argc, argv); auto n = rclcpp::Node::make_shared("pose_graph"); posegraph.registerPub(n); std::string config_file = argv[1]; printf("config_file : %s\n", argv[1]); //n->get_parameter("config_file", config_file); cv::FileStorage fsSettings(config_file, cv::FileStorage::READ); if(!fsSettings.isOpened()) { std::cerr << "ERROR: Wrong path to settings" << std::endl; } double camera_visual_size = fsSettings["visualize_camera_size"]; cameraposevisual.setScale(camera_visual_size); cameraposevisual.setLineWidth(camera_visual_size / 10.0); LOOP_CLOSURE = fsSettings["loop_closure"]; std::string IMAGE_TOPIC; int LOAD_PREVIOUS_POSE_GRAPH; // read param n->get_parameter("visualization_shift_x", VISUALIZATION_SHIFT_X); n->get_parameter("visualization_shift_y", VISUALIZATION_SHIFT_Y); n->get_parameter("skip_cnt", SKIP_CNT); n->get_parameter("skip_dis", SKIP_DIS); if (LOOP_CLOSURE) { ROW = fsSettings["image_height"]; COL = fsSettings["image_width"]; //std::string pkg_path = ament_index_cpp::get_package_share_directory("pose_graph"); std::string pkg_path = "/home/ylab/VINS-Mono_ros2_ws/src/VINS-Mono/pose_graph"; std::cout << "pkg_path:" << pkg_path << std::endl; string vocabulary_file = pkg_path + "/../support_files/brief_k10L6.bin"; std::cout << "vocabulary_file: " << vocabulary_file << std::endl; posegraph.loadVocabulary(vocabulary_file); BRIEF_PATTERN_FILE = pkg_path + "/../support_files/brief_pattern.yml"; std::cout << "BRIEF_PATTERN_FILE" << BRIEF_PATTERN_FILE << std::endl; m_camera = camodocal::CameraFactory::instance()->generateCameraFromYamlFile(config_file.c_str()); fsSettings["image_topic"] >> IMAGE_TOPIC; fsSettings["pose_graph_save_path"] >> POSE_GRAPH_SAVE_PATH; fsSettings["output_path"] >> VINS_RESULT_PATH; fsSettings["save_image"] >> DEBUG_IMAGE; // create folder if not exists FileSystemHelper::createDirectoryIfNotExists(POSE_GRAPH_SAVE_PATH.c_str()); FileSystemHelper::createDirectoryIfNotExists(VINS_RESULT_PATH.c_str()); VISUALIZE_IMU_FORWARD = fsSettings["visualize_imu_forward"]; LOAD_PREVIOUS_POSE_GRAPH = fsSettings["load_previous_pose_graph"]; FAST_RELOCALIZATION = fsSettings["fast_relocalization"]; VINS_RESULT_PATH = VINS_RESULT_PATH + "/vins_result_loop.csv"; std::ofstream fout(VINS_RESULT_PATH, std::ios::out); fout.close(); fsSettings.release(); if (LOAD_PREVIOUS_POSE_GRAPH) //加载先前的位姿图 { printf("load pose graph\n"); m_process.lock(); posegraph.loadPoseGraph(); m_process.unlock(); printf("load pose graph finish\n"); load_flag = 1; } else { printf("no previous pose graph\n"); load_flag = 1; } } fsSettings.release(); //订阅topic并执行各自回调函数 auto sub_imu_forward = n->create_subscription<nav_msgs::msg::Odometry>("/imu_propagate", rclcpp::QoS(rclcpp::KeepLast(2000)), imu_forward_callback); auto sub_vio = n->create_subscription<nav_msgs::msg::Odometry>("/odometry", rclcpp::QoS(rclcpp::KeepLast(2000)), vio_callback); auto sub_image = n->create_subscription<sensor_msgs::msg::Image>(IMAGE_TOPIC, rclcpp::QoS(rclcpp::KeepLast(2000)), image_callback); auto sub_pose = n->create_subscription<nav_msgs::msg::Odometry>("/keyframe_pose", rclcpp::QoS(rclcpp::KeepLast(2000)), pose_callback); auto sub_extrinsic = n->create_subscription<nav_msgs::msg::Odometry>("/extrinsic", rclcpp::QoS(rclcpp::KeepLast(2000)), extrinsic_callback); auto sub_point = n->create_subscription<sensor_msgs::msg::PointCloud>("/keyframe_point", rclcpp::QoS(rclcpp::KeepLast(2000)), point_callback); auto sub_relo_relative_pose = n->create_subscription<nav_msgs::msg::Odometry>("/relo_relative_pose", rclcpp::QoS(rclcpp::KeepLast(2000)), relo_relative_pose_callback); pub_match_img = n->create_publisher<sensor_msgs::msg::Image>("match_image", 1000); pub_camera_pose_visual = n->create_publisher<visualization_msgs::msg::MarkerArray>("camera_pose_visual", 1000); pub_key_odometrys = n->create_publisher<visualization_msgs::msg::Marker>("key_odometrys", 1000); pub_vio_path = n->create_publisher<nav_msgs::msg::Path>("no_loop_path", 1000); pub_match_points = n->create_publisher<sensor_msgs::msg::PointCloud>("match_points", 1000); std::thread measurement_process; std::thread keyboard_command_process; measurement_process = std::thread(process); keyboard_command_process = std::thread(command); rclcpp::spin(n); return 0; }以下是单独运行pose_graph节点时输出的话题:

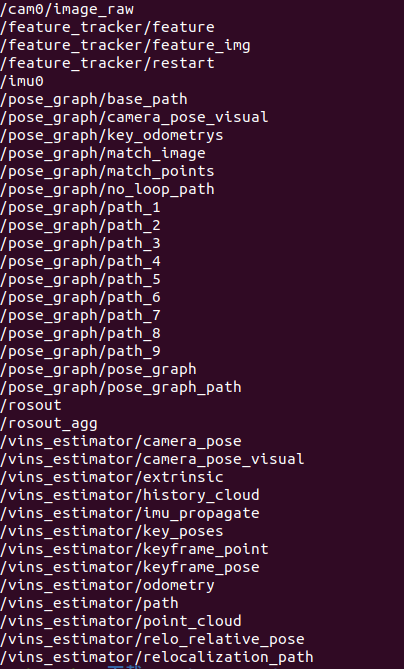

以下是在ROS1里正常运行(没有play bag的时候)输出的所有话题:

-

@1056481492 在 改写港科大VINS-Mono系统到ROS2版本,出现只能调用一个节点的问题 中说:

运行vins_estimator_node可以调用起其他两个节点

详细说说怎么调起来的,既然可以单独运行,写个launch就好了

-

@小鱼 谢谢大神回复,我是用

ros2 run vins_estimator vins_estimator_node + "path/to/config/file"运行起来的,另外两个节点也都是用ros2 run 命令。因为是从ros1迁移到ros2,我也尝试过按教程把.launch变成.launch.xml,但是发现并没法正常运行……于是我用了github上这个把ros1的launch文件转换成.py文件的代码(https://github.com/aws-robotics/ros2-launch-file-migrator ),但是会报错,我运行的语句为:

ros2 launch vins_estimator euroc.launch.pyeuroc.launch.py文件内容如下:

import os import sys import launch import launch_ros.actions from ament_index_python.packages import get_package_share_directory def generate_launch_description(): ld = launch.LaunchDescription([ launch.actions.DeclareLaunchArgument( name='config_path', default_value=get_package_share_directory( 'feature_tracker') + '/../config/euroc/euroc_config.yaml' ), launch.actions.DeclareLaunchArgument( name='vins_path', default_value=get_package_share_directory( 'feature_tracker') + '/../config/../' ), launch_ros.actions.Node( package='feature_tracker', executable='feature_tracker', name='feature_tracker', output='log', parameters=[ { 'config_file': launch.substitutions.LaunchConfiguration('config_path') }, { 'vins_folder': launch.substitutions.LaunchConfiguration('vins_path') } ] ), launch_ros.actions.Node( package='vins_estimator', executable='vins_estimator_node', name='vins_estimator', output='screen', parameters=[ { 'config_file': launch.substitutions.LaunchConfiguration('config_path') }, { 'vins_folder': launch.substitutions.LaunchConfiguration('vins_path') } ] ), launch_ros.actions.Node( package='pose_graph', executable='pose_graph', name='pose_graph', output='screen', parameters=[ { 'config_file': launch.substitutions.LaunchConfiguration('config_path') }, { 'visualization_shift_x': '0' }, { 'visualization_shift_y': '0' }, { 'skip_cnt': '0' }, { 'skip_dis': '0' } ] ) ]) return ld if __name__ == '__main__': generate_launch_description()报错的内容如下,看起来是没有正确读到配置文件,可能还有OpenCV版本的问题?我不太明白,我电脑中的环境应该是OpenCV3.2.0:

[INFO] [launch]: All log files can be found below /home/ylab/.ros/log/2023-08-18-16-30-57-099132-ylab-448601 [INFO] [launch]: Default logging verbosity is set to INFO [INFO] [feature_tracker-1]: process started with pid [448603] [INFO] [vins_estimator_node-2]: process started with pid [448605] [INFO] [pose_graph-3]: process started with pid [448607] [vins_estimator_node-2] [INFO] [1692347457.201213834] []: init begins [vins_estimator_node-2] failed to load config_file [vins_estimator_node-2] OpenCV Error: Null pointer (NULL or empty buffer) in cvOpenFileStorage, file /home/ylab/Software/opencv-3.2.0/modules/core/src/persistence.cpp, line 4153 [vins_estimator_node-2] terminate called after throwing an instance of 'cv::Exception' [vins_estimator_node-2] what(): /home/ylab/Software/opencv-3.2.0/modules/core/src/persistence.cpp:4153: error: (-27) NULL or empty buffer in function cvOpenFileStorage [vins_estimator_node-2] [pose_graph-3] OpenCV Error: Null pointer (NULL or empty buffer) in cvOpenFileStorage, file /home/ylab/Software/opencv-3.2.0/modules/core/src/persistence.cpp, line 4153 [pose_graph-3] terminate called after throwing an instance of 'cv::Exception' [pose_graph-3] what(): /home/ylab/Software/opencv-3.2.0/modules/core/src/persistence.cpp:4153: error: (-27) NULL or empty buffer in function cvOpenFileStorage [pose_graph-3] [feature_tracker-1] OpenCV Error: Null pointer (NULL or empty buffer) in cvOpenFileStorage, file /home/ylab/Software/opencv-3.2.0/modules/core/src/persistence.cpp, line 4153 [feature_tracker-1] terminate called after throwing an instance of 'cv::Exception' [feature_tracker-1] what(): /home/ylab/Software/opencv-3.2.0/modules/core/src/persistence.cpp:4153: error: (-27) NULL or empty buffer in function cvOpenFileStorage [feature_tracker-1] [ERROR] [vins_estimator_node-2]: process has died [pid 448605, exit code -6, cmd '/home/ylab/VINS-Mono_ros2_ws/install/vins_estimator/lib/vins_estimator/vins_estimator_node --ros-args -r __node:=vins_estimator --params-file /tmp/launch_params_8lbip8z5 --params-file /tmp/launch_params_k8kni6r9']. [ERROR] [pose_graph-3]: process has died [pid 448607, exit code -6, cmd '/home/ylab/VINS-Mono_ros2_ws/install/pose_graph/lib/pose_graph/pose_graph --ros-args -r __node:=pose_graph --params-file /tmp/launch_params_385zd2mg --params-file /tmp/launch_params_yjtb9c85 --params-file /tmp/launch_params_68jd396d --params-file /tmp/launch_params_362ai_1z --params-file /tmp/launch_params_oazpjw8i']. [ERROR] [feature_tracker-1]: process has died [pid 448603, exit code -6, cmd '/home/ylab/VINS-Mono_ros2_ws/install/feature_tracker/lib/feature_tracker/feature_tracker --ros-args -r __node:=feature_tracker --params-file /tmp/launch_params_hosogd95 --params-file /tmp/launch_params_blsew764']. 请大神指教

请大神指教 -

@1056481492 在 改写港科大VINS-Mono系统到ROS2版本,出现只能调用一个节点的问题 中说:

failed to load config_file

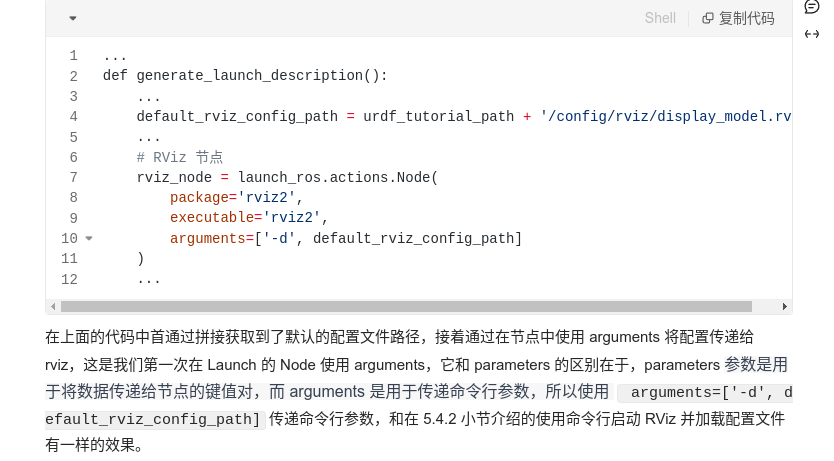

看起来是参数没有被正确传递

@1056481492 在 改写港科大VINS-Mono系统到ROS2版本,出现只能调用一个节点的问题 中说:

parameters

应该改为 arguments

-

@小鱼 谢谢大神回复,我按照您说的改了之后还是没法读取yaml文件,这几天我又改了几个版本的launch.py文件,发现都无法读取配置文件。我思考了一下,感觉是yaml文件直接用了ros1中格式的问题,不知道这个方向是否正确?

以下是我改写的readParameter函数,其中ros1和ros2不一样的地方是由getParam()函数变成了get_parameter()函数,不知是否是这个原因。... template <typename T> T readParam(rclcpp::Node::SharedPtr n, std::string name) { T ans; if (n->get_parameter(name, ans)) { //ROS_INFO("Loaded " << name << ": " << ans); std::cout<<"Loaded "<< name << ": " << ans << std::endl; } else { //ROS_ERROR_STREAM("Failed to load " << name); std::cout<< "failed to load " << name <<std::endl;//终端输出该语句后shutdown std::cout << "ans=" << ans << std::endl;//目前调试的结果来看ans输出为空 rclcpp::shutdown(); } return ans; } void readParameters(rclcpp::Node::SharedPtr n)//std::string config_file)// { /* FILE *fh = fopen(config_file.c_str(),"r"); if(fh == NULL){ ROS_WARN("config_file doesn't exist; wrong config_file path"); return; } fclose(fh); */ std::string config_file; config_file = readParam<std::string>(n, "config_file"); cv::FileStorage fsSettings(config_file, cv::FileStorage::READ); if(!fsSettings.isOpened()) { std::cerr << "ERROR: Wrong path to settings" << std::endl; } fsSettings["imu_topic"] >> IMU_TOPIC; std::cout << "imu topic: " << IMU_TOPIC << std::endl; SOLVER_TIME = fsSettings["max_solver_time"]; NUM_ITERATIONS = fsSettings["max_num_iterations"]; MIN_PARALLAX = fsSettings["keyframe_parallax"]; MIN_PARALLAX = MIN_PARALLAX / FOCAL_LENGTH; std::string OUTPUT_PATH; fsSettings["output_path"] >> OUTPUT_PATH; VINS_RESULT_PATH = OUTPUT_PATH + "/vins_result_no_loop.csv"; std::cout << "result path " << VINS_RESULT_PATH << std::endl; // create folder if not exists FileSystemHelper::createDirectoryIfNotExists(OUTPUT_PATH.c_str()); std::ofstream fout(VINS_RESULT_PATH, std::ios::out); fout.close(); ACC_N = fsSettings["acc_n"]; ACC_W = fsSettings["acc_w"]; GYR_N = fsSettings["gyr_n"]; GYR_W = fsSettings["gyr_w"]; G.z() = fsSettings["g_norm"]; ROW = fsSettings["image_height"]; COL = fsSettings["image_width"]; ROS_INFO("ROW: %f COL: %f ", ROW, COL); ROS_INFO("acc_n: %f acc_w: %f ", ACC_N, ACC_W); ESTIMATE_EXTRINSIC = fsSettings["estimate_extrinsic"]; if (ESTIMATE_EXTRINSIC == 2) { ROS_WARN("have no prior about extrinsic param, calibrate extrinsic param"); RIC.push_back(Eigen::Matrix3d::Identity()); TIC.push_back(Eigen::Vector3d::Zero()); EX_CALIB_RESULT_PATH = OUTPUT_PATH + "/extrinsic_parameter.csv"; } else { if ( ESTIMATE_EXTRINSIC == 1) { ROS_WARN(" Optimize extrinsic param around initial guess!"); EX_CALIB_RESULT_PATH = OUTPUT_PATH + "/extrinsic_parameter.csv"; } if (ESTIMATE_EXTRINSIC == 0) ROS_WARN(" fix extrinsic param "); cv::Mat cv_R, cv_T; fsSettings["extrinsicRotation"] >> cv_R; fsSettings["extrinsicTranslation"] >> cv_T; //std::cout << "cv_R:" << cv_R << std::endl; Eigen::Matrix3d eigen_R; Eigen::Vector3d eigen_T; cv::cv2eigen(cv_R, eigen_R); cv::cv2eigen(cv_T, eigen_T); Eigen::Quaterniond Q(eigen_R); eigen_R = Q.normalized(); RIC.push_back(eigen_R); TIC.push_back(eigen_T); ROS_INFO("Extrinsic_R : {}", RIC[0]); std::cout << "Extrinsic_R :" << std::endl << RIC[0] << std::endl; std::cout << "Extrinsic_T :" << std::endl << TIC[0] << std::endl; //ROS_INFO("Extrinsic_T : ", TIC[0].transpose()); } INIT_DEPTH = 5.0; BIAS_ACC_THRESHOLD = 0.1; BIAS_GYR_THRESHOLD = 0.1; TD = fsSettings["td"]; ESTIMATE_TD = fsSettings["estimate_td"]; if (ESTIMATE_TD) ROS_INFO("Unsynchronized sensors, online estimate time offset, initial td: ", TD); else ROS_INFO("Synchronized sensors, fix time offset: ", TD); ROLLING_SHUTTER = fsSettings["rolling_shutter"]; if (ROLLING_SHUTTER) { TR = fsSettings["rolling_shutter_tr"]; ROS_INFO("rolling shutter camera, read out time per line: ", TR); } else { TR = 0; } fsSettings.release(); }试图读取的yaml文件如下:

%YAML:1.0 #common parameters imu_topic: "/imu0" image_topic: "/cam0/image_raw" output_path: "/home/ylab/output/" #camera calibration model_type: PINHOLE camera_name: camera image_width: 752 image_height: 480 distortion_parameters: k1: -2.917e-01 k2: 8.228e-02 p1: 5.333e-05 p2: -1.578e-04 projection_parameters: fx: 4.616e+02 fy: 4.603e+02 cx: 3.630e+02 cy: 2.481e+02 # Extrinsic parameter between IMU and Camera. estimate_extrinsic: 0 # 0 Have an accurate extrinsic parameters. We will trust the following imu^R_cam, imu^T_cam, don't change it. # 1 Have an initial guess about extrinsic parameters. We will optimize around your initial guess. # 2 Don't know anything about extrinsic parameters. You don't need to give R,T. We will try to calibrate it. Do some rotation movement at beginning. #If you choose 0 or 1, you should write down the following matrix. #Rotation from camera frame to imu frame, imu^R_cam extrinsicRotation: !!opencv-matrix rows: 3 cols: 3 dt: d data: [0.0148655429818, -0.999880929698, 0.00414029679422, 0.999557249008, 0.0149672133247, 0.025715529948, -0.0257744366974, 0.00375618835797, 0.999660727178] #Translation from camera frame to imu frame, imu^T_cam extrinsicTranslation: !!opencv-matrix rows: 3 cols: 1 dt: d data: [-0.0216401454975,-0.064676986768, 0.00981073058949] #feature traker paprameters max_cnt: 150 # max feature number in feature tracking min_dist: 30 # min distance between two features freq: 10 # frequence (Hz) of publish tracking result. At least 10Hz for good estimation. If set 0, the frequence will be same as raw image F_threshold: 1.0 # ransac threshold (pixel) show_track: 1 # publish tracking image as topic equalize: 1 # if image is too dark or light, trun on equalize to find enough features fisheye: 0 # if using fisheye, trun on it. A circle mask will be loaded to remove edge noisy points #optimization parameters max_solver_time: 0.04 # max solver itration time (ms), to guarantee real time max_num_iterations: 8 # max solver itrations, to guarantee real time keyframe_parallax: 10.0 # keyframe selection threshold (pixel) #imu parameters The more accurate parameters you provide, the better performance acc_n: 0.08 # accelerometer measurement noise standard deviation. #0.2 0.04 gyr_n: 0.004 # gyroscope measurement noise standard deviation. #0.05 0.004 acc_w: 0.00004 # accelerometer bias random work noise standard deviation. #0.02 gyr_w: 2.0e-6 # gyroscope bias random work noise standard deviation. #4.0e-5 g_norm: 9.81007 # gravity magnitude #loop closure parameters loop_closure: 1 # start loop closure load_previous_pose_graph: 0 # load and reuse previous pose graph; load from 'pose_graph_save_path' fast_relocalization: 0 # useful in real-time and large project pose_graph_save_path: "/home/ylab/output/pose_graph/" # save and load path #unsynchronization parameters estimate_td: 0 # online estimate time offset between camera and imu td: 0.0 # initial value of time offset. unit: s. readed image clock + td = real image clock (IMU clock) #rolling shutter parameters rolling_shutter: 0 # 0: global shutter camera, 1: rolling shutter camera rolling_shutter_tr: 0 # unit: s. rolling shutter read out time per frame (from data sheet). #visualization parameters save_image: 1 # save image in pose graph for visualization prupose; you can close this function by setting 0 visualize_imu_forward: 0 # output imu forward propogation to achieve low latency and high frequence results visualize_camera_size: 0.4 # size of camera marker in RVIZ